This is the third iteration of the augmented reality solar system project. In 2011 we posted an early version of this project, built with the BuildAR framework, that worked only on desktop computers. In 2012 we added a Flash implementation of the same concept, with downloadable lesson plans and source files.

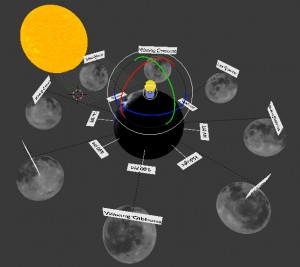

As we previously mentioned, we recently switched to the Metaio framework, which lets us publish our projects both as mobile applications (via the free Junaio AR browser app for iOS and Android) and as desktop applications (downloadable standalone packages). This latest version of the Solar System project comes with a redesigned book titled Augmented Reality Magic Book: Solar System. The book contains essential facts about the planets of the Solar System (NASA.gov, 2013) and comes with a set of interactive AR markers that project multimedia content such as 3D models, videos, images, and audio. Each planet has two markers: a main marker with a 3D model of the planet, and a second marker with supplementary content. The book is available as a free download in .pdf and .pub formats. The content is taken from NASA.gov, and the book is released under the Creative Commons Attribution-NonCommercial 4.0 International license; if you have Microsoft Publisher, you are free to modify the content under the terms of that license.

Our intent is to make this book available to the general public, specifically K-12 teachers, parents, and students, with the goal of making learning more fun, engaging, and constructive. This fits our ongoing aim of further exploring the use of augmented reality in learning and education, and of providing the broader community with free, meaningful, useful, and engaging AR content and frameworks.

Download the Augmented Reality Magic Book: Solar System: Publisher (.Pub) | Adobe Acrobat (.PDF)

Download the desktop application: Win | Mac

For the mobile version, you will need to download the Junaio browser app from the Apple App Store or the Android Marketplace onto your mobile device.

Enjoy!